Detecting Insider Threats

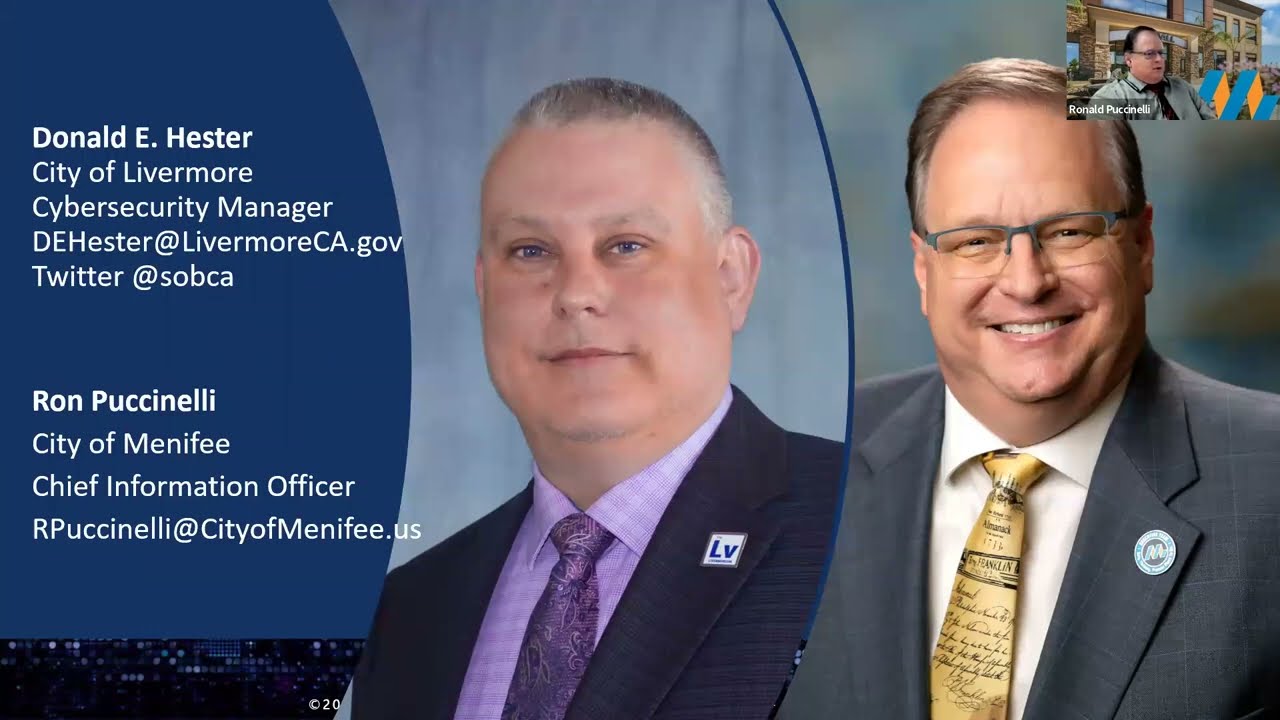

- Donald E. Hester

- Mar 2, 2017

- 3 min read

Insiders have long been one of the biggest security concerns for organizations. People are known to be the weakest link in the chain of security for organizations. For example, an employee can execute malicious software or code that can lead to a large-scale security incident. Security practitioners have traditionally focused on better technical controls like anti-malware software and end-point security. However, this is only one layer of protection.

Studies on ransomware have found that 80% of organizations hit by ransomware had anti-malware or anti-virus software installed. Clearly, we cannot rely on a single control or a single layer to protect against a particular attack vector.

To address the insider threat, we need to focus not only on education but also on behavior modeling. Having automated or AI systems baseline typical user activity and flag abnormal behavior for review will help detect insider threats.

At the recent 2017 RSA Conference, I noticed some vendors offering insider threat tools. Most seemed to focus on monitoring social media or attempting to phish insiders with bogus emails. I want to see them move beyond this. I want to see a baseline of typical end-user behavior and reporting on deviations by individuals and by groups. By groups, I mean that typical behavior on systems for the HR department would differ from typical behavior for the accounting department. A baseline will do a better job of eliminating false positives if it overlays multiple cross sections of roles or departments on top of individual behavior.

By behavior, I am not talking about telling jokes; I am talking about behavior on computers or in systems, such as the typical ways people access files. If someone normally accesses files only locally and then one day, out of the blue, their account is used to access files from a remote device, that should raise a flag for review. However, this is just the lowest level. More sophisticated analytics should evaluate activity based on position, department, the individual, times of use, vector, applications used, types of activities performed on systems, individual calendar events, and other factors.
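As a minimal sketch of that lowest-level check, the Python below records which access vectors each account has used during a baseline period and flags any later event that uses a vector never seen for that account. The event format (a user name paired with a vector label such as "local" or "remote") and the function names are assumptions made for illustration, not taken from any particular product.

```python
from collections import defaultdict


def build_baseline(events):
    """Map each user to the set of access vectors seen in the baseline period."""
    baseline = defaultdict(set)
    for user, vector in events:
        baseline[user].add(vector)
    return baseline


def flag_anomalies(baseline, new_events):
    """Return the events whose access vector was never seen for that user."""
    return [
        (user, vector)
        for user, vector in new_events
        if vector not in baseline.get(user, set())
    ]


# Hypothetical history: alice only ever works locally, bob uses both vectors.
history = [
    ("alice", "local"),
    ("alice", "local"),
    ("bob", "local"),
    ("bob", "remote"),
]
today = [("alice", "remote"), ("bob", "remote"), ("alice", "local")]

baseline = build_baseline(history)
flagged = flag_anomalies(baseline, today)
print(flagged)  # [('alice', 'remote')]
```

A real system would weigh many more signals (department, time of day, application), but the shape stays the same: learn what is normal, then report what falls outside it.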

Of course, we could also use an active defense, whereby anomalous behavior is blocked until the user can be authenticated by an alternate process. However, we would need to test these systems for a period of time to determine their false positive and false negative rates.
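To make the two rates concrete, here is a small Python sketch that computes them from a trial period in which every event has been reviewed. It assumes each record pairs whether the system flagged the event with whether a reviewer judged it actually malicious; the record format and sample data are hypothetical.

```python
def error_rates(records):
    """Compute (false positive rate, false negative rate).

    records: iterable of (flagged, malicious) boolean pairs.
    """
    records = list(records)
    false_pos = sum(1 for flagged, bad in records if flagged and not bad)
    false_neg = sum(1 for flagged, bad in records if not flagged and bad)
    benign = sum(1 for _, bad in records if not bad)
    malicious = sum(1 for _, bad in records if bad)
    # Guard against empty classes so a short trial does not divide by zero.
    fpr = false_pos / benign if benign else 0.0
    fnr = false_neg / malicious if malicious else 0.0
    return fpr, fnr


# Hypothetical trial: four benign events (one wrongly flagged) and
# two malicious events (one missed).
trial = [
    (True, False),
    (False, False),
    (False, False),
    (False, False),
    (True, True),
    (False, True),
]
fpr, fnr = error_rates(trial)
print(fpr, fnr)  # 0.25 0.5
```

With blocking enabled, a false positive locks out a legitimate user, so the false positive rate measured here is a reasonable gate for deciding when to move from flagging to blocking.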

You can see something like this used to prevent credit card fraud. Often when I travel to a new city, my business credit card is put on hold until I contact the company, because buying something in a city I have not visited before is anomalous behavior. The problem is that the banks can’t view my calendar to see that I had a trip planned to that city, or that I bought airline tickets for it. Inside a company, however, access to calendar information and similar data can help these systems limit false positives.
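The calendar idea can be sketched in a few lines of Python. This assumes the monitoring system can read each user's usual city and a list of planned trips given as (city, start date, end date); those data structures and names are hypothetical and stand in for whatever calendar source a company actually has.

```python
from datetime import date


def trip_covers(calendar, user, city, day):
    """True if the user's calendar has a trip to `city` that includes `day`."""
    return any(
        trip_city == city and start <= day <= end
        for trip_city, start, end in calendar.get(user, [])
    )


def should_flag(home_city, calendar, user, city, day):
    """Flag activity from an unusual city unless a planned trip explains it."""
    if city == home_city.get(user):
        return False
    return not trip_covers(calendar, user, city, day)


# Hypothetical data: alice is based in Oakland with one planned trip.
home_city = {"alice": "Oakland"}
calendar = {"alice": [("Chicago", date(2017, 3, 6), date(2017, 3, 8))]}

print(should_flag(home_city, calendar, "alice", "Chicago", date(2017, 3, 7)))  # False
print(should_flag(home_city, calendar, "alice", "Chicago", date(2017, 3, 20)))  # True
```

The same activity is suppressed during the planned trip and flagged outside it, which is exactly the context the bank lacks.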

Microsoft’s new AI capabilities in the Azure Security Center look like the most promising development in this area.

Another vendor heading in the right direction is Netwrix, whose Netwrix Auditor lets users detect and investigate abnormal user behavior. This sounds a lot like what I am talking about, minus the AI needed for what I am driving at. Here is a short video demo of Netwrix at https://vimeo.com/190103829/1b01564715.
